preprint: https://arxiv.org/abs/2505.04457

Abstract

Training data cleaning is a new application for generative model-based speech restoration (SR). This paper introduces Miipher-2, an SR model designed for million-hour scale data, which targets training data cleaning for large-scale generative models such as large language models. Key challenges addressed include generalization to unseen languages, operation without explicit conditioning (e.g., text, speaker ID), and computational efficiency. Miipher-2 utilizes a frozen, pre-trained Universal Speech Model (USM), supporting over 300 languages, as a robust, conditioning-free feature extractor. To optimize efficiency and minimize memory, Miipher-2 incorporates parallel adapters for predicting clean USM features from noisy inputs and employs the WaveFit neural vocoder for waveform synthesis. These components were trained on 3,000 hours of multilingual, studio-quality recordings with augmented degradations, while USM parameters remained fixed. Experimental results demonstrate Miipher-2's superior or comparable performance to conventional SR models in word-error-rate, speaker similarity, and both objective and subjective sound quality scores across all tested languages. Miipher-2 operates efficiently on consumer-grade accelerators, achieving a real-time factor of 0.0078, enabling the processing of a million-hour speech dataset in approximately three days using 100 lite accelerators.
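The throughput figure at the end of the abstract can be checked with simple arithmetic. The sketch below is a back-of-the-envelope estimate, not the authors' benchmark code. It assumes that the real-time factor of 0.0078 is measured per accelerator and that the work scales perfectly linearly across 100 accelerators, with no I/O or scheduling overhead. The function name `processing_days` is hypothetical.

```python
def processing_days(audio_hours: float, rtf: float, num_accelerators: int) -> float:
    """Estimate wall-clock days needed to process `audio_hours` of speech.

    Assumptions (not stated in the source): `rtf` is per accelerator and
    the workload is split evenly with perfect linear scaling.
    """
    # Real-time factor = processing time / audio duration.
    accelerator_hours = audio_hours * rtf
    # Spread the work evenly over all accelerators.
    wall_clock_hours = accelerator_hours / num_accelerators
    return wall_clock_hours / 24.0


days = processing_days(audio_hours=1_000_000, rtf=0.0078, num_accelerators=100)
print(f"{days:.2f} days")  # prints "3.25 days"
```

Under these assumptions, one million hours of audio needs about 7,800 accelerator-hours, or roughly 78 wall-clock hours on 100 accelerators, which is consistent with the "approximately three days" stated above.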

Figure 1: Miipher-2 Architecture
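To make the idea of a parallel adapter on a frozen feature extractor more concrete, here is a minimal NumPy sketch of the generic pattern. It is not the Miipher-2 implementation: the layer type, the dimensions, the zero initialization, and the names `frozen_layer`, `parallel_adapter`, and `adapted_layer` are all illustrative assumptions. It only shows a small bottleneck branch running alongside a frozen layer, with its output added to the frozen layer's output.

```python
import numpy as np

rng = np.random.default_rng(0)


def frozen_layer(x, w_frozen):
    """Stand-in for one frozen pre-trained layer; w_frozen is never updated."""
    return np.tanh(x @ w_frozen)


def parallel_adapter(x, w_down, w_up):
    """Small bottleneck MLP applied to the same input as the frozen layer."""
    hidden = np.maximum(x @ w_down, 0.0)  # down-projection followed by ReLU
    return hidden @ w_up                  # up-projection back to feature size


def adapted_layer(x, w_frozen, w_down, w_up):
    """Frozen path and adapter path run in parallel and are summed."""
    return frozen_layer(x, w_frozen) + parallel_adapter(x, w_down, w_up)


# Hypothetical sizes chosen only for this illustration.
frames, dim, bottleneck = 10, 16, 4
x = rng.normal(size=(frames, dim))                   # noisy input features
w_frozen = rng.normal(size=(dim, dim))               # frozen weights
w_down = rng.normal(size=(dim, bottleneck)) * 0.1    # trainable
w_up = np.zeros((bottleneck, dim))                   # trainable, zero-initialized

# With a zero-initialized up-projection the adapter starts as a no-op,
# so the adapted layer initially reproduces the frozen layer exactly.
y = adapted_layer(x, w_frozen, w_down, w_up)
print(y.shape)
```

In a setup like this, only `w_down` and `w_up` would receive gradient updates, which keeps the number of trainable parameters small compared with the frozen backbone.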

LibriTTS (Miipher-1 vs Miipher-2)

This section shows samples from the objective experiments. We compared Miipher-2, which uses USM, with other methods on LibriTTS [1].

Each table includes the raw LibriTTS input, the URGENT2025 [2] baseline TF-GridNet output, the Miipher-1 [3] output (monolingual, with text/speaker conditioning) taken from LibriTTS-R [4], and the output of our proposed Miipher-2 (multilingual, without text/speaker conditioning).

Compared with TF-GridNet, both Miipher-1 and our Miipher-2 work in very noisy and reverberant environments. In addition, Miipher-2 preserves more speaker characteristics and time-frequency structure than Miipher-1.

|

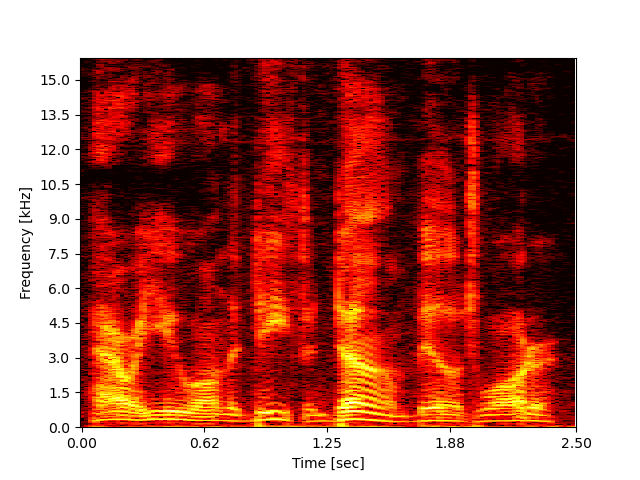

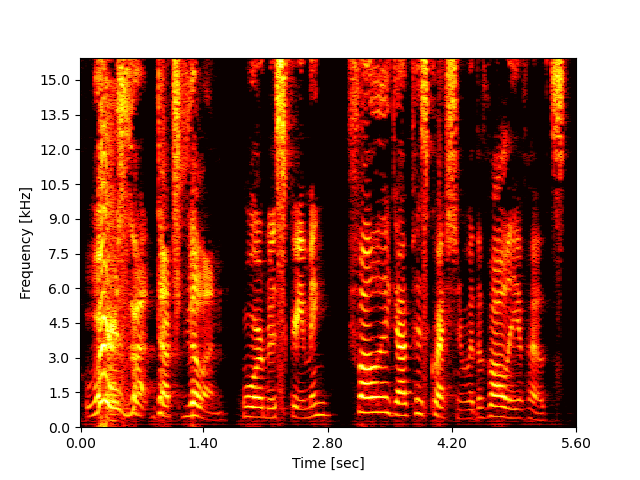

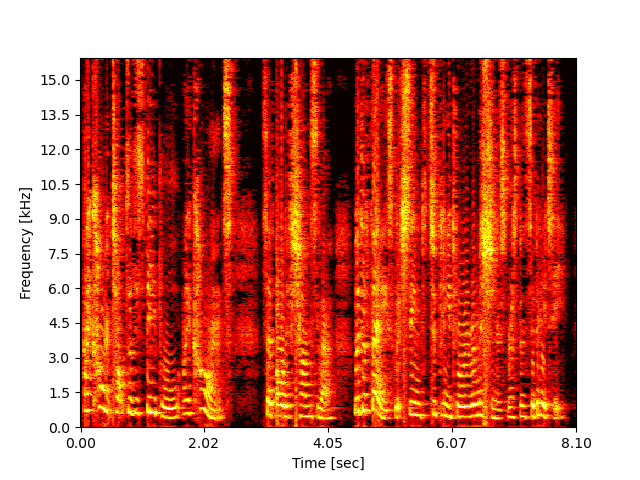

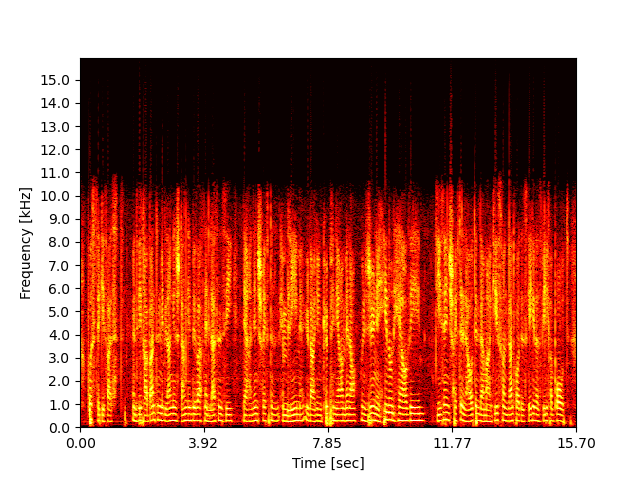

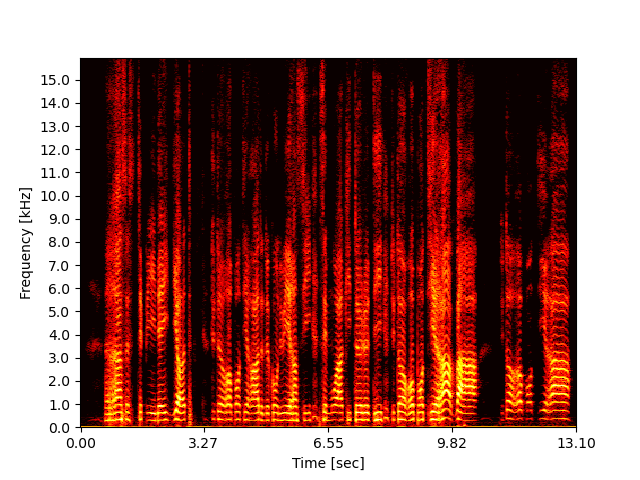

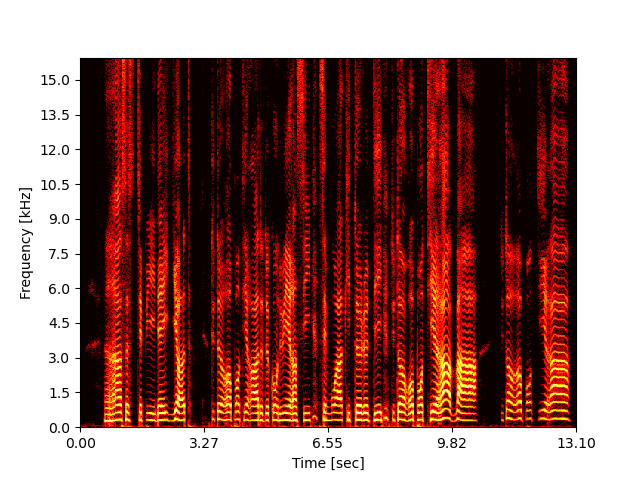

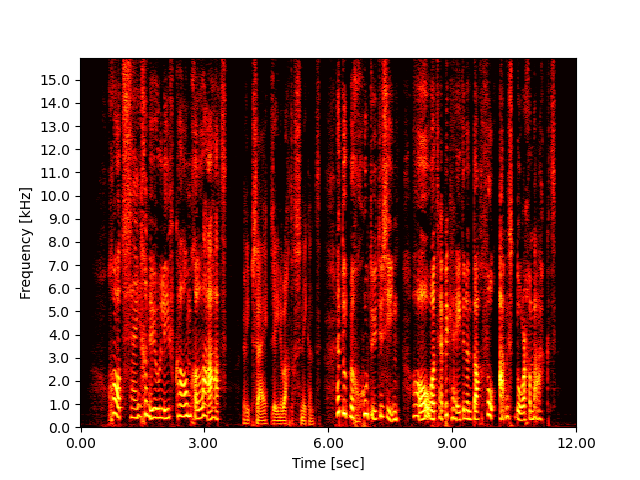

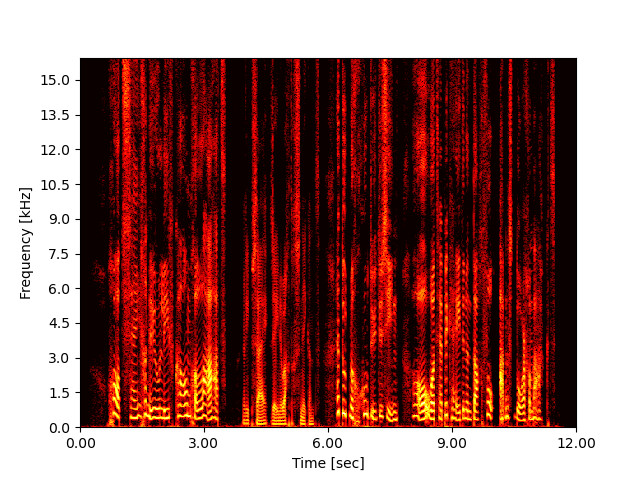

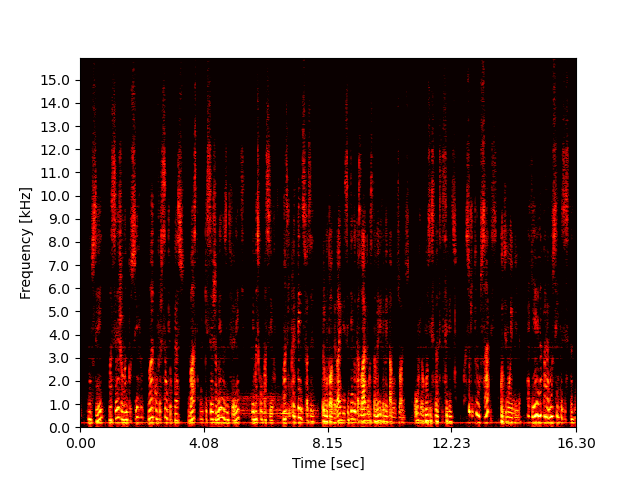

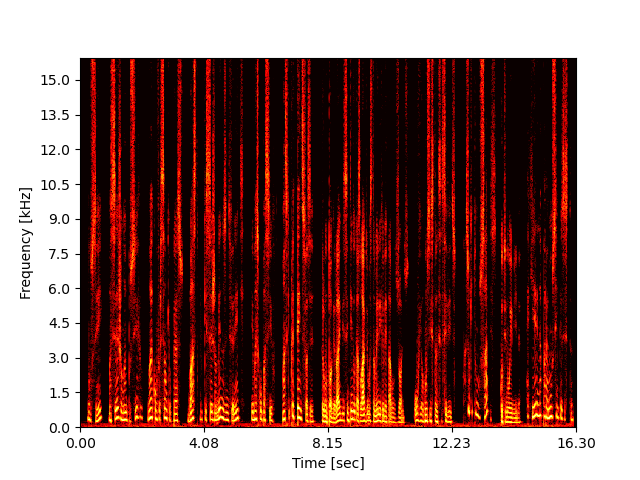

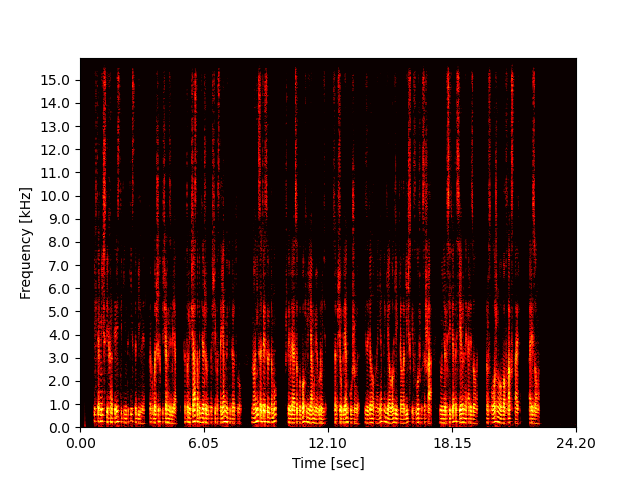

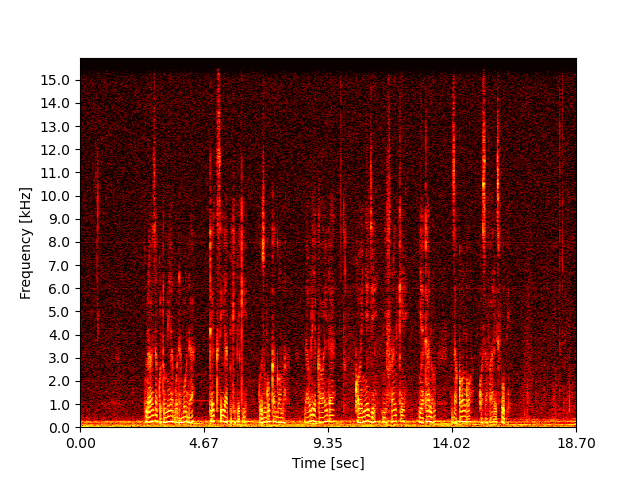

Example 3005_163391_000039_000000:

"How are you on the deef and dumb, Bilgewater?"

NOTE: Miipher-2 can restore the noisy input in various intonation by its multilingual capability while Miipher-1 could not preserve the original English intonation in this case. |

|||

|

LibriTTS input

|

TF-GridNet

|

Miipher-1

|

Miipher-2 (ours)

|

|---|---|---|---|

|

|

|

|

|

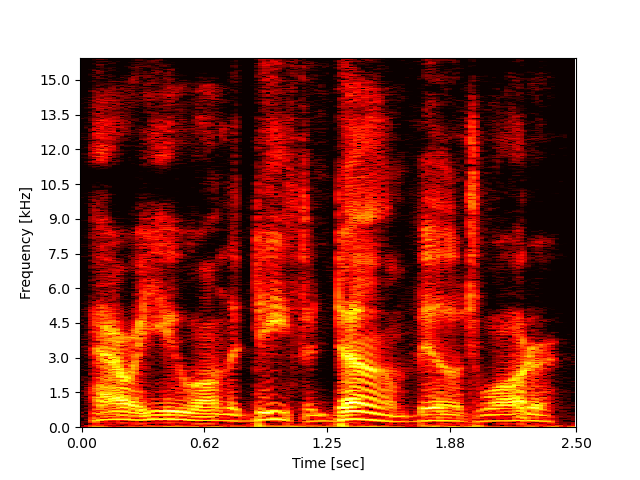

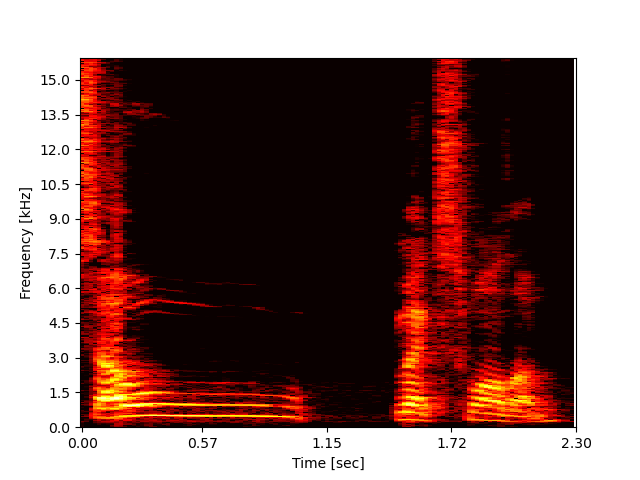

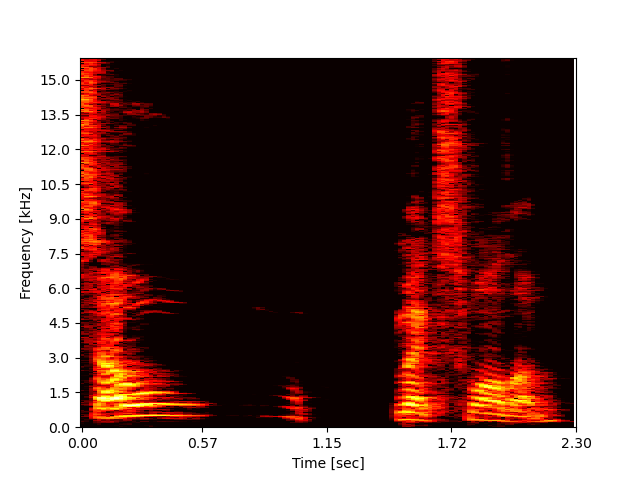

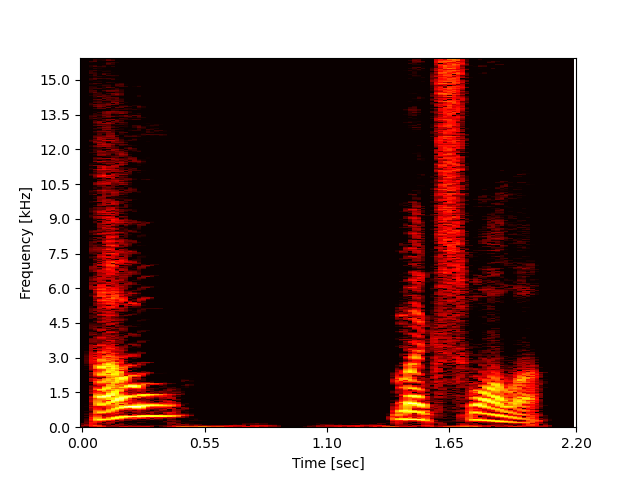

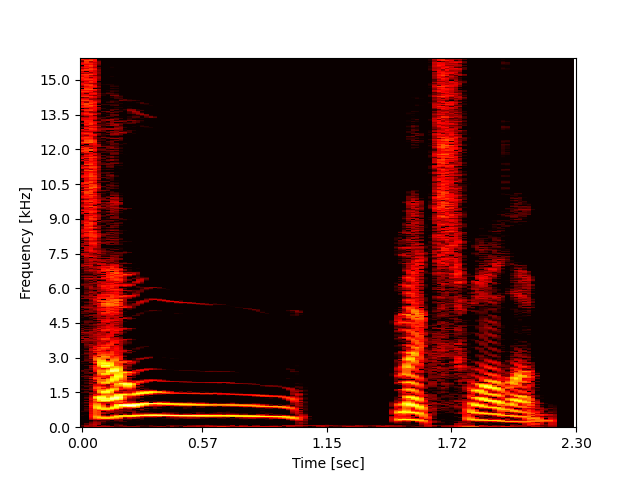

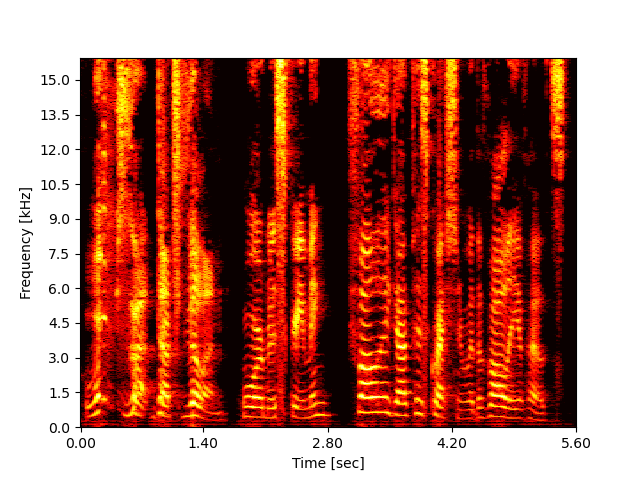

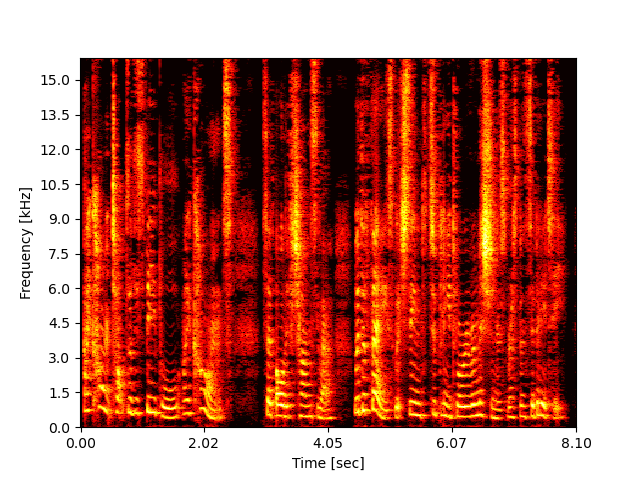

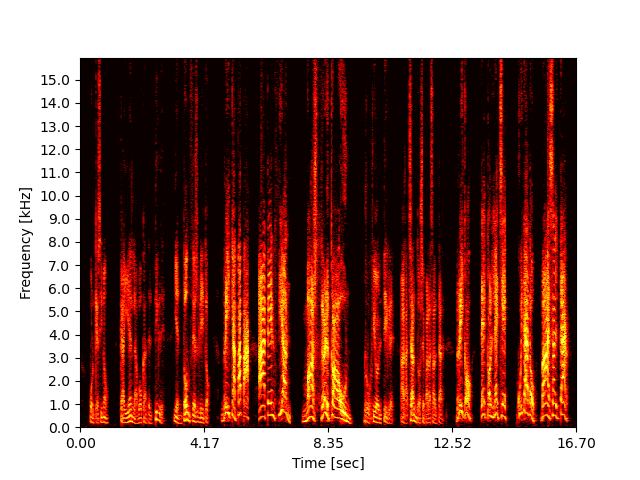

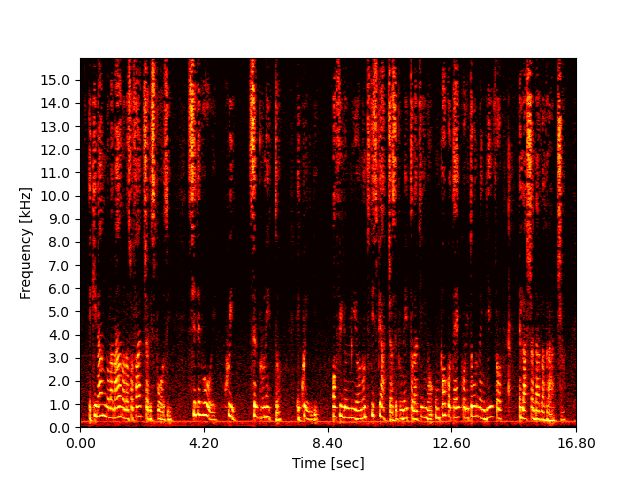

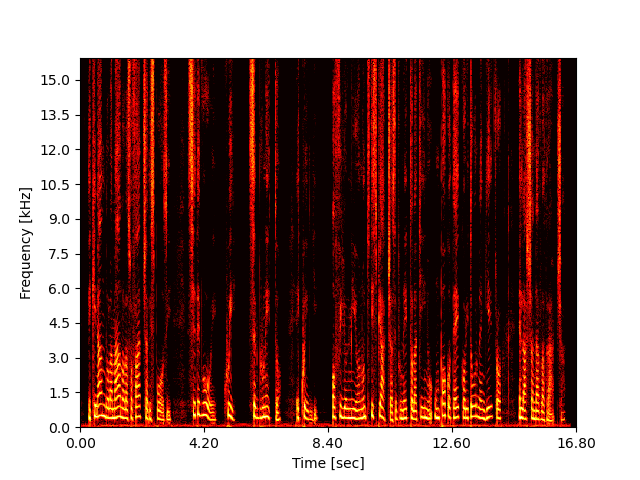

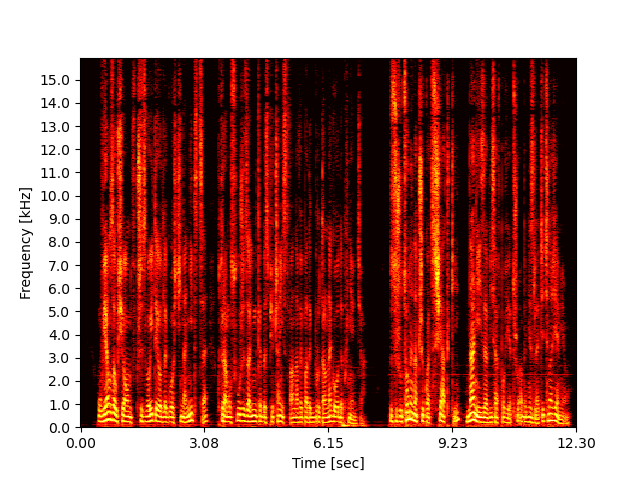

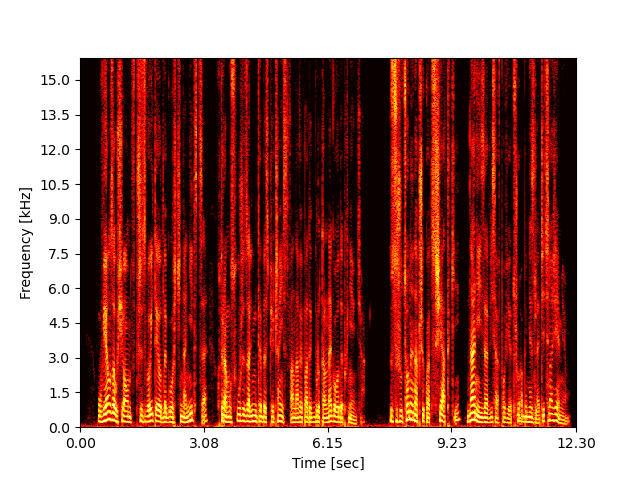

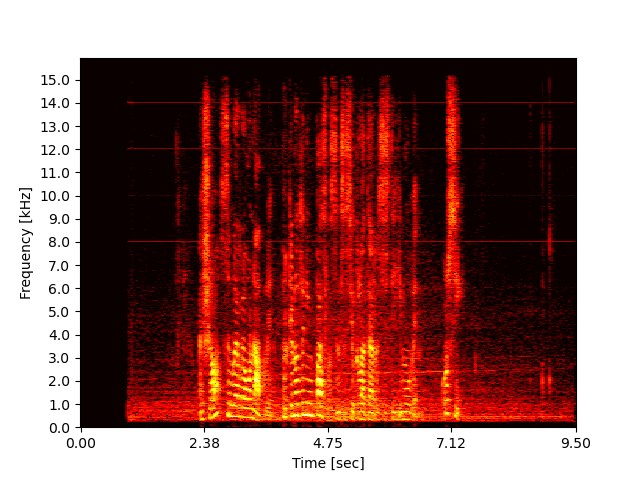

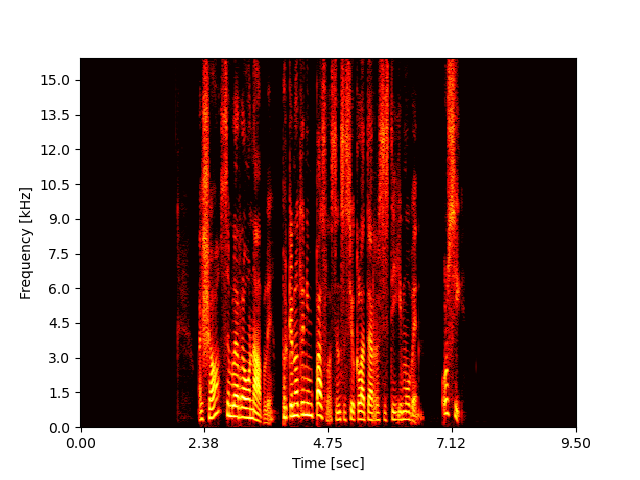

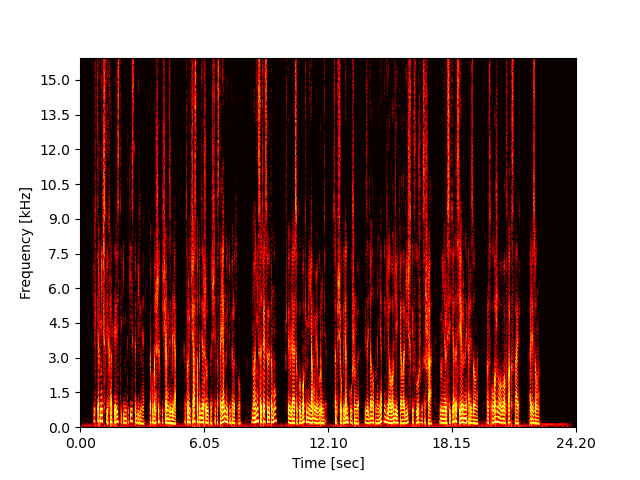

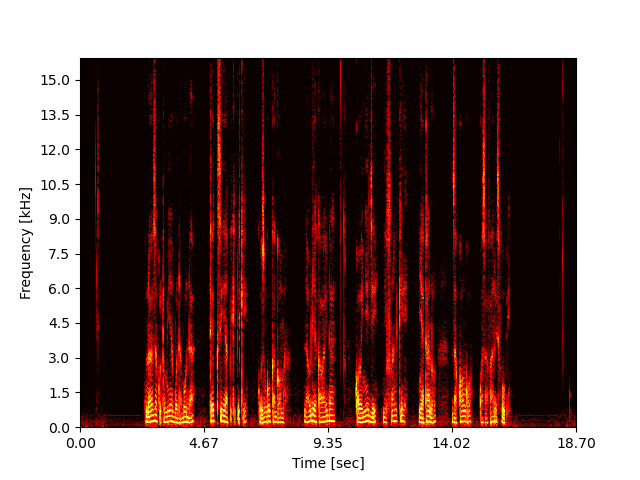

Example 4852_28311_000006_000000:

"So-" Mike swallowed.

NOTE: Miipher-2 could restore the word of "So-", while Miipher-1 changed it a lot and TF-GridNet also stuttered the word. |

|||

|

LibriTTS input

|

TF-GridNet

|

Miipher-1

|

Miipher-2 (ours)

|

|---|---|---|---|

|

|

|

|

|

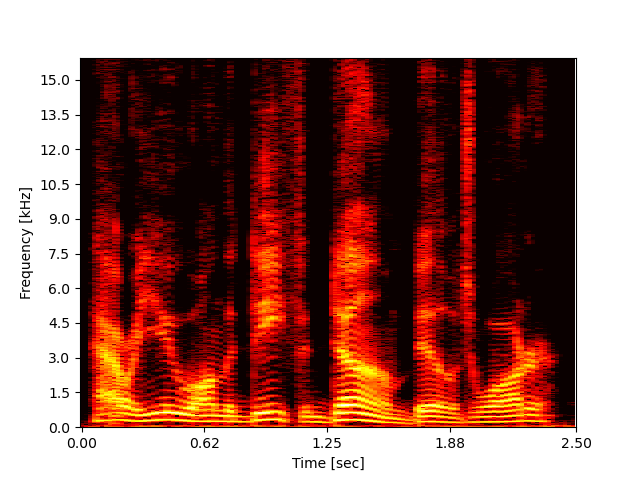

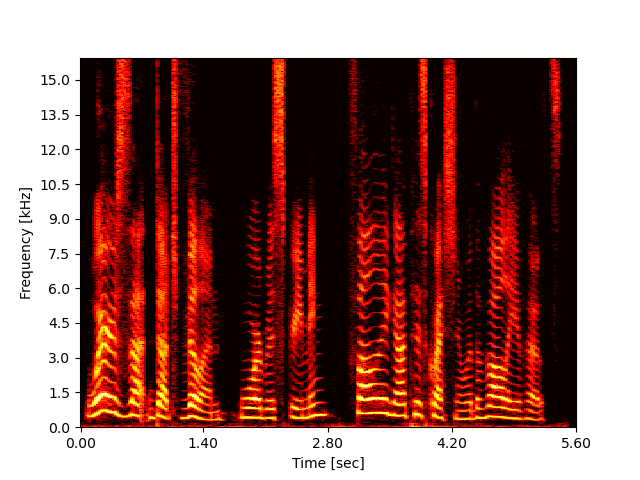

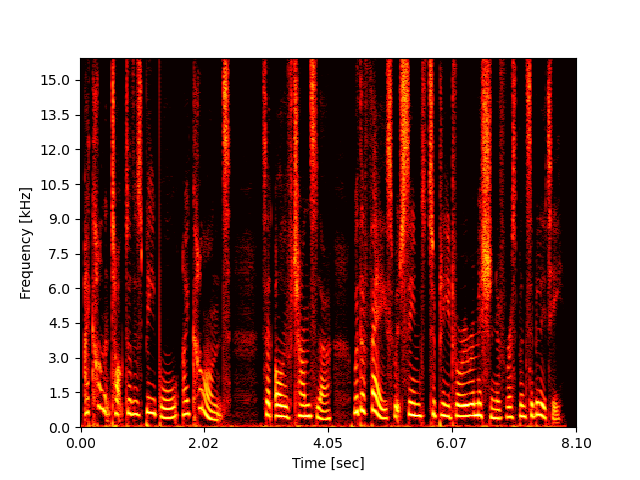

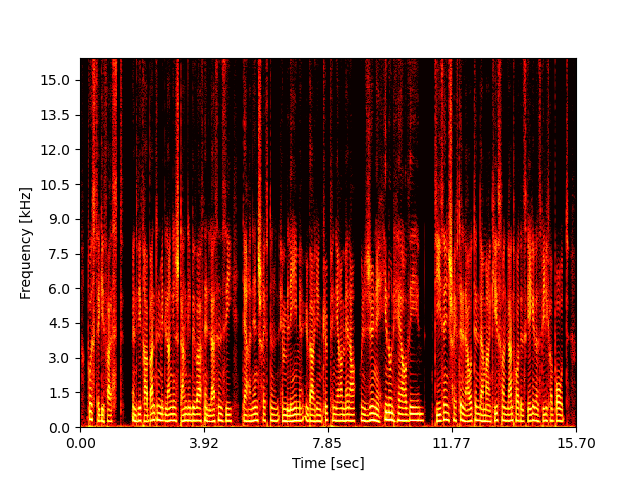

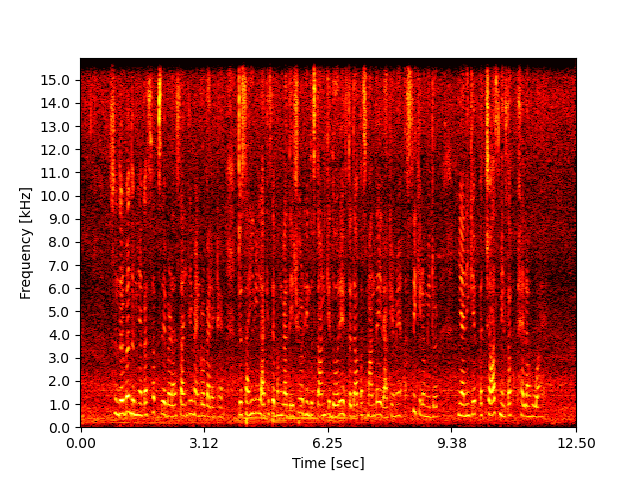

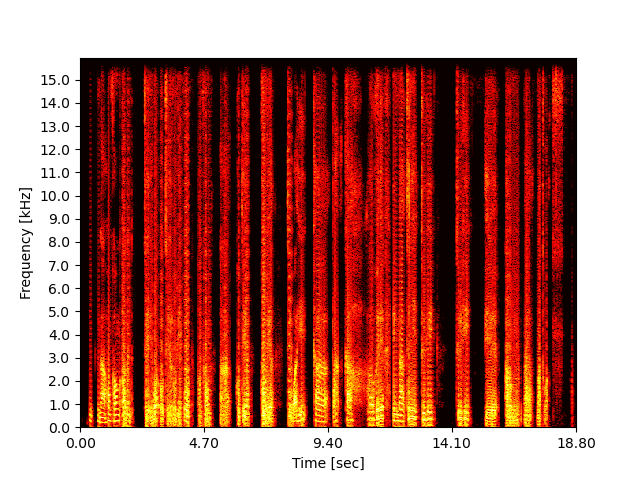

Example 8280_266249_000082_000000:

"Where's that Dutch villain?" Ward was screaming, following up his question with a volley of oaths.

NOTE: Miipher-2 could restore emotional speech while Miipher-1 completely changed the speaker and style. |

|||

|

LibriTTS input

|

TF-GridNet

|

Miipher-1

|

Miipher-2 (ours)

|

|---|---|---|---|

|

|

|

|

|

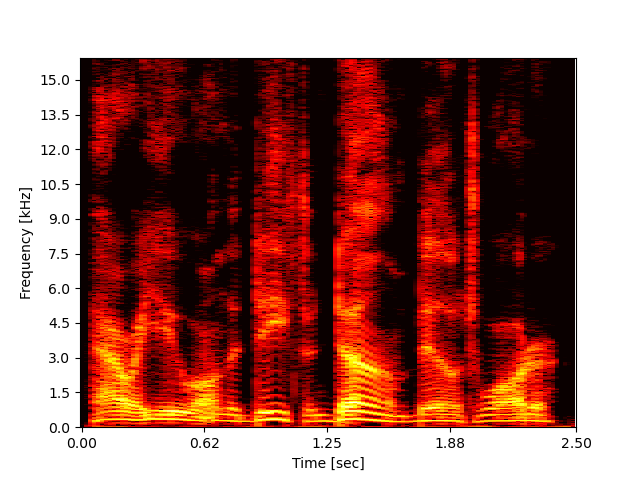

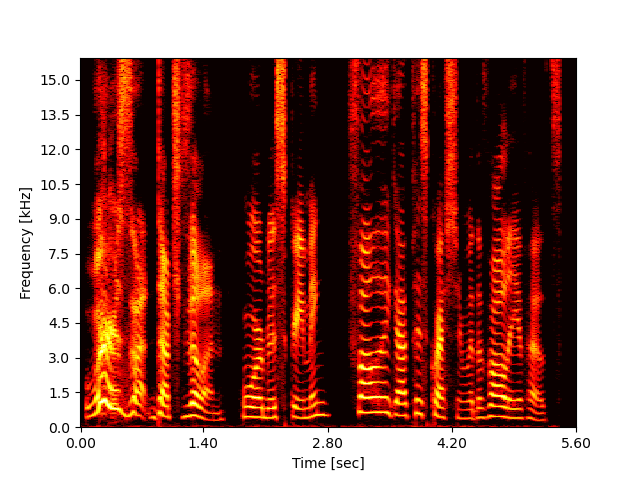

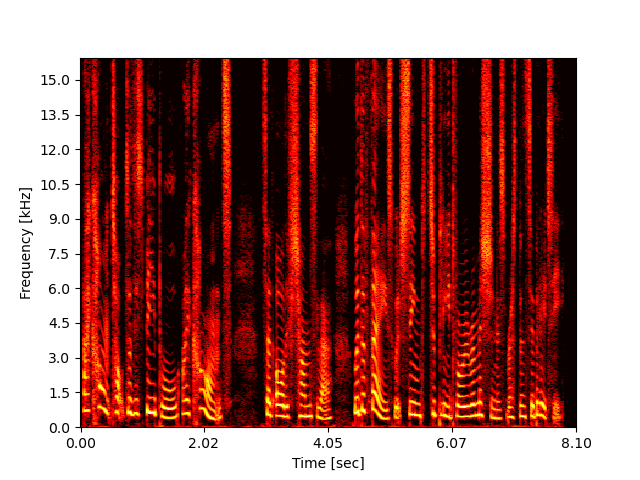

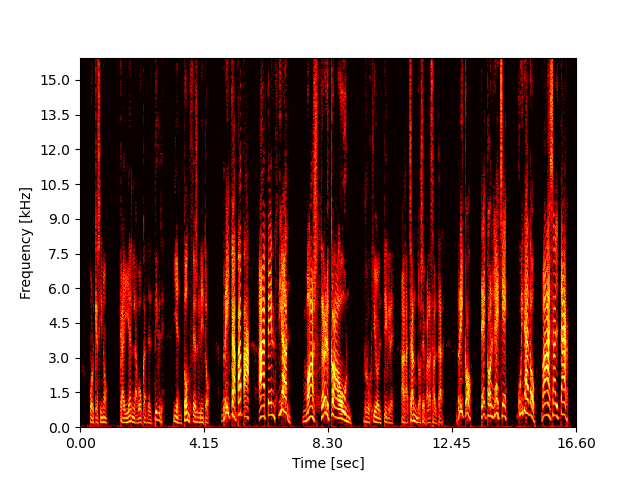

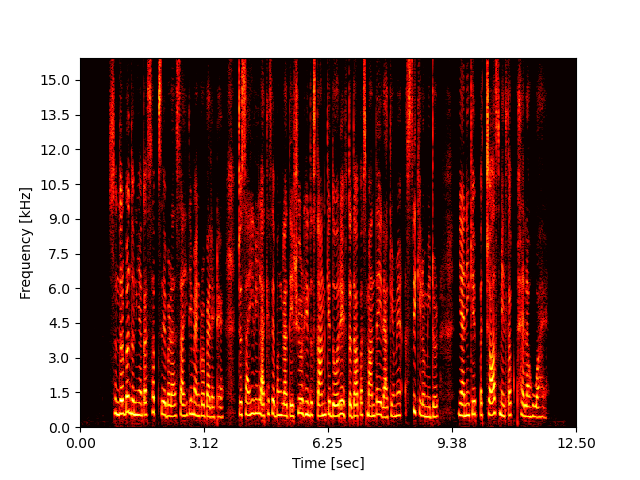

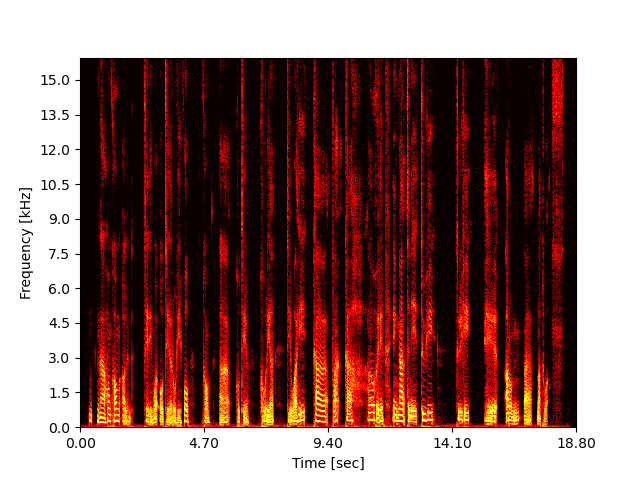

Example 6128_63244_000002_000000:

"I can't talk to those people, I can't!" said Olive Chancellor, with a face which seemed to plead for a remission of responsibility.

NOTE: Miipher-1 output sometimes sounds robotic (vocoder-ish) when the input is very breathy. |

|||

|

LibriTTS input

|

TF-GridNet

|

Miipher-1

|

Miipher-2 (ours)

|

|---|---|---|---|

|

|

|

|

Multilingual LibriSpeech (known languages)

This section shows samples from the subjective experiments on Multilingual LibriSpeech (MLS) [5], whose languages overlap with our finetuning dataset.

Example de_de 1844_931_000007:

poste gefasst und wie trabanten mit langen stangen bewaffnet lassen sie deren inschriften embleme über den köpfen ihrer männer und söhne hin und her wehen diese inschriften lauten der marquis von blandford ist gegen das billige brot

NOTE: This output demonstrates that Miipher-2 can restore a band-limited recording with background noise.

| MLS input | Miipher-2 (ours) |
|---|---|
| | |

Example es_es 10667_6706_000019:

porque si no caía en la boca del tigre y entonces gritó rica papa atención más cerca aún rugió el tigre agachándose para saltar rico té con leche cuidado va a saltar

NOTE: It is difficult to find noisy es_es samples because es_es showed the highest DNSMOS and SQuID quality scores in MLS.

| MLS input | Miipher-2 (ours) |
|---|---|
| | |

Example fr_fr 2223_1745_000094:

race stupide et idiote tu te repentiras de te conduire ainsi c'est moi qui te le dis tu t'en repentiras va tu t'en repentiras

| MLS input | Miipher-2 (ours) |
|---|---|
| | |

Example it_it 280_529_000090:

e chinando la mano a la sua faccia rispuosi siete voi qui ser brunetto e quelli oh figliuol mio non ti dispiaccia se brunetto latino un poco teco ritorna indietro e lascia andar la traccia

| MLS input | Miipher-2 (ours) |
|---|---|
| | |

Example nl_nl 4429_3991_000055:

god zegene uw lief kalm gelaat riep zij zenuwachtig snikkend het doet mij goed u te zien o wat heb ik heden een dag vol angst doorgemaakt

| MLS input | Miipher-2 (ours) |
|---|---|
| | |

Example pl_pl 9098_8338_000085:

uwiązał się on w przyzwoitej odległości na nitce która mu służy za linkę bezpieczeństwa na wypadek brutalnego odepchnięcia zrzucony na przykład z siatki na niej zawisa w powietrzu aby nie zlecieć na ziemię

| MLS input | Miipher-2 (ours) |
|---|---|
| | |

Example pt_pt 5739_4739_000087:

não sei mas seja ou não impossível não é a conversão que eu peço basta-me que seja menos indiferente e mais compassivo mas que pretendes fazer perguntou adelaide sentindo que as lágrimas também lhe rebentavam dos olhos houve alguns instantes de silêncio mas o que tu não sabes continuou emília é que ele não é para mim um simples

NOTE: This pt_pt example contains multiple speakers.

| MLS input | Miipher-2 (ours) |
|---|---|
| | |

FLEURS (unknown languages)

This section shows samples from the objective experiments on FLEURS [6], which contains low-resource languages that are not present in our finetuning dataset. We selected one locale from each FLEURS language category except the CJK category, whose locales were fully covered by our finetuning data. Compared with MLS, the FLEURS samples are much noisier and more reverberant, yet Miipher-2 can still restore clean speech with only subtle residual noise.

Example ca_es 001633443218971:

Això sembla raonable perquè no tenim pas la sensació que la Terra s'estigui movent o sí

| FLEURS input | Miipher-2 (ours) |
|---|---|
| | |

Example ru_ru 001633540429756:

Их термические характеристики не такие стабильные как у больших пещер на Земле которые часто поддерживают почти постоянную температуру но они соответствуют тому что является глубокими ямами в грунте — сообщил Глен Кушинг из отдела планетной геологии Геологической службы США и Университета Северной Аризоны расположенного во Флагстаффе Аризона

| FLEURS input | Miipher-2 (ours) |
|---|---|
| | |

Example ur_pk 001633351247178:

سندربن دنیا کا سب سے بڑا ساحلی مینگروو بیلٹ ہے جو ساحلی علاقے سے بنگلادیشی اور ہندوستان کے دور افتادہ پسماندہ علاقے میں 80 کیلو میٹر 50 میل تک پھیلا ہوا ہے

| FLEURS input | Miipher-2 (ours) |
|---|---|
| | |

Example sw_ke 001634489347424:

Unaweza kutumia boda-boda teksi ya pikipiki kuzunguka Goma Nauli ya kawaida kwa wenyeji ni Franki 500 za Kongo kwa safari fupi

| FLEURS input | Miipher-2 (ours) |
|---|---|
| | |

Example mi_nz 001635418752917:

Nā Hong Kong Island te ingoa o te rohe o Hong Kong ā koirā te wāhi ka whakaarohia e ngā tini tūruhi hei aronga matua

| FLEURS input | Miipher-2 (ours) |
|---|---|
| | |

Acknowledgement

We appreciate the valuable feedback and support from Keisuke Kinoshita, Bhuvana Ramabhadran, Richard William Sproat, Yotaro Kubo, and Wataru Nakata.

References

[1] H. Zen et al., "LibriTTS: A Corpus Derived from LibriSpeech for Text-to-Speech," Proc. Interspeech 2019, doi: 10.21437/Interspeech.2019-2441 http://www.openslr.org/60/

[2] K. Saijo et al., "TF-GridNet baseline," Interspeech URGENT 2025 challenge. https://huggingface.co/kohei0209/tfgridnet_urgent25

[3] Y. Koizumi et al., "Miipher: A Robust Speech Restoration Model Integrating Self-Supervised Speech and Text Representations," 2023 IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA), doi: 10.1109/WASPAA58266.2023.10248089. https://arxiv.org/abs/2303.01664

[4] Y. Koizumi et al., "LibriTTS-R: A Restored Multi-Speaker Text-to-Speech Corpus," Proc. Interspeech 2023, doi: 10.21437/Interspeech.2023-1584 https://www.openslr.org/141/

[5] V. Pratap et al., "MLS: A Large-Scale Multilingual Dataset for Speech Research," Proc. Interspeech 2020, doi: 10.21437/Interspeech.2020-2826 https://www.openslr.org/94/

[6] A. Conneau et al., "FLEURS: FEW-Shot Learning Evaluation of Universal Representations of Speech," 2022 IEEE Spoken Language Technology Workshop (SLT), pp. 798-805, doi: 10.1109/SLT54892.2023.10023141. https://huggingface.co/datasets/google/fleurs