Fuzz target generation using LLMs

Background

OSS-Fuzz performs continuous fuzzing of 1000+ open source projects across most major languages. To integrate a new project, a human typically analyzes the attack surface of a library and writes fuzz targets (also called fuzzing harnesses) to exercise the relevant code. Linked with a fuzzing engine (e.g. libFuzzer, AFL, Centipede), this enables coverage-guided fuzzing for all OSS-Fuzz projects. Depending on the complexity of the project, writing fuzz targets typically requires several hours of manual work and sufficient background knowledge of the project.

Additionally, the main challenge for most integrated OSS-Fuzz projects is ensuring high code coverage. Most OSS-Fuzz projects have fairly low runtime coverage (~30%) despite millions of hours of CPU time. This means that approximately 70% of the code in each project we fuzz is never exercised, so vulnerabilities there go undetected. Our preliminary research found that many fuzz blockers (as determined by Fuzz Introspector) stem from deficiencies in existing targets rather than in the fuzzing engines.

Generating fuzz targets via LLMs can reduce the manual effort required both to fuzz existing OSS-Fuzz projects more thoroughly and to integrate new projects into OSS-Fuzz.

Goals

Our ideal end state of this research is to use LLMs for two use cases:

- Completely automatic fuzz target generation (or modification of existing targets) for existing OSS-Fuzz projects to unblock fuzz blockers and increase project code coverage (and bugs found) for free.

- Completely automatic fuzz target generation for completely new OSS-Fuzz projects. This is much more challenging than the first use case, and is an extension of it.

Our current experiments focus on the first use case for C/C++ projects. This report serves as a preliminary investigation into how effective LLMs are for this use case. More detailed results and the experimentation framework for our research will be published at a later date.

Experiment framework

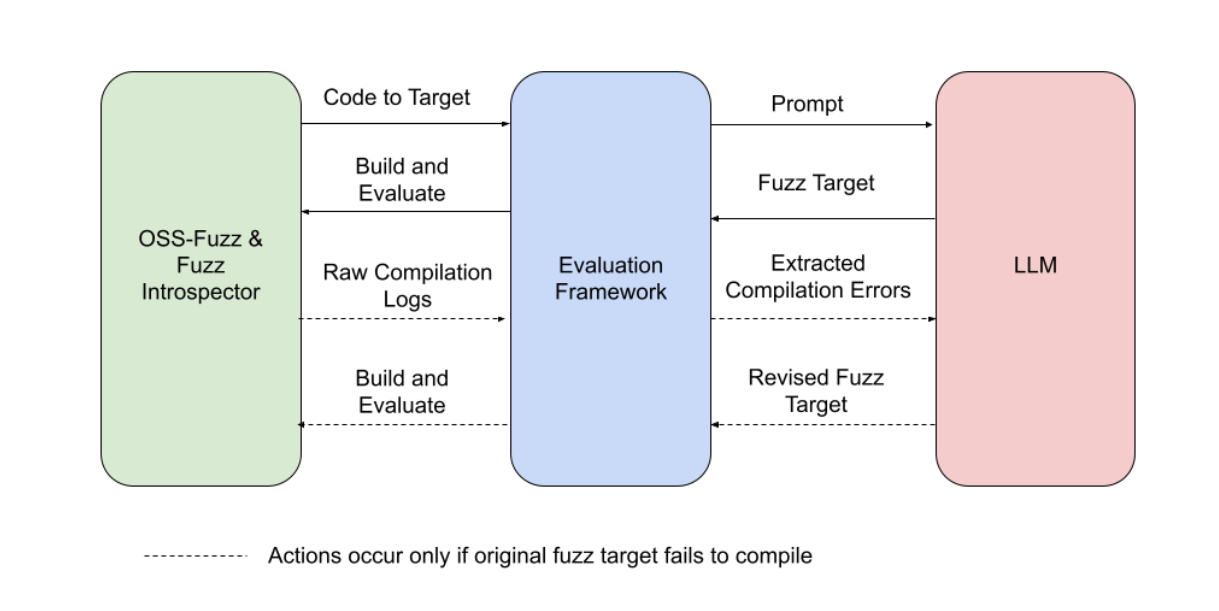

To discover whether an LLM could successfully write new fuzz targets, we built an evaluation framework that connects OSS-Fuzz to Google’s LLMs, conducts the experiment, and evaluates the results. The steps look like this:

- OSS-Fuzz’s Fuzz Introspector tool identifies an under-fuzzed, high-potential portion of the target project’s code and passes the code to the evaluation framework.

- The evaluation framework creates a prompt that the LLM will use to write the new fuzz target. The prompt includes project-specific information.

- The evaluation framework takes the fuzz target generated by the LLM and runs the new target.

- The evaluation framework observes the run for any change in code coverage or crashes.

- In the event that the fuzz target fails to compile, the evaluation framework prompts the LLM to write a revised fuzz target that addresses the compilation errors.

1. Identifying high potential portions of the project’s code

We leverage Fuzz Introspector (example JSON endpoint) to provide us with a list of functions with low runtime coverage (but high potential to reach more code coverage). These are turned into benchmark YAML files, each consisting of an OSS-Fuzz project and a list of function signatures to generate new targets for.

We have started with a small set of benchmarks, and will gradually scale this to larger, automated sets of benchmarks taken from all of OSS-Fuzz as we improve the function selection and prompt generation process.

Example benchmark (YAML):

functions:
- XML_Parser XMLCALL XML_ExternalEntityParserCreate(XML_Parser oldParser, const XML_Char *context, const XML_Char *encodingName)
- XML_Parser XMLCALL XML_ParserCreateNS(const XML_Char *encodingName, XML_Char nsSep)
- XML_Bool XMLCALL XML_ParserReset(XML_Parser parser, const XML_Char *encodingName)
- static enum XML_Error PTRCALL externalParEntInitProcessor(XML_Parser parser, const char *s, const char *end, const char **nextPtr)
project: expat
target_path: /src/expat/expat/fuzz/xml_parse_fuzzer.c
target_name: xml_parse_fuzzer_UTF-8
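To make the selection step concrete, here is a minimal sketch of how a list of function records could be filtered into a benchmark with the same fields as the YAML above. The record keys (`signature`, `runtime_coverage_percent`, `reachable_lines`), the thresholds, the `make_benchmark` helper, and the sample records are all hypothetical; they do not reflect the actual Fuzz Introspector schema or our selection criteria.

```python
from dataclasses import dataclass, field


@dataclass
class Benchmark:
    """One benchmark: a project plus the function signatures to target."""
    project: str
    target_path: str
    target_name: str
    functions: list = field(default_factory=list)


def make_benchmark(project, target_path, target_name, records,
                   max_coverage=20.0, min_reachable=50, limit=4):
    """Pick low-coverage, high-potential functions (hypothetical criteria)."""
    candidates = [
        r for r in records
        if r["runtime_coverage_percent"] <= max_coverage
        and r["reachable_lines"] >= min_reachable
    ]
    # Prefer the functions that could unlock the most code.
    candidates.sort(key=lambda r: r["reachable_lines"], reverse=True)
    return Benchmark(project, target_path, target_name,
                     [r["signature"] for r in candidates[:limit]])


# Made-up records, for illustration only.
records = [
    {"signature": "XML_Parser XMLCALL XML_ParserCreateNS(const XML_Char *encodingName, XML_Char nsSep)",
     "runtime_coverage_percent": 0.0, "reachable_lines": 900},
    {"signature": "XML_Bool XMLCALL XML_ParserReset(XML_Parser parser, const XML_Char *encodingName)",
     "runtime_coverage_percent": 5.0, "reachable_lines": 300},
    {"signature": "int already_well_fuzzed(void)",
     "runtime_coverage_percent": 95.0, "reachable_lines": 1200},
]
benchmark = make_benchmark("expat", "/src/expat/expat/fuzz/xml_parse_fuzzer.c",
                           "xml_parse_fuzzer_UTF-8", records)
```

In this sketch the well-covered function is dropped and the remaining two are ordered by how much code they could reach.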

2. Prompt generation

We dynamically generate a prompt based on a template (example).

As part of our experimentation, we tried various prompt approaches. So far, the best results have come from including:

- One example of an existing function signature and fuzz target from the project under test, formatted into problem and solution structure. Too many examples yield worse results.

- Two examples from other projects in OSS-Fuzz, formatted in the same way.

- Examples of how to leverage FuzzedDataProvider to generate inputs for function arguments.

- A priming statement that gives the task context.

- Examples of code anti-patterns to avoid.

The dynamically generated sections today include examples of existing fuzz targets from other projects on OSS-Fuzz as well as one example from the project under test. Ideas we have not yet explored include adding more structured information about the function under test, such as:

- Relevant data structure definitions

- Function implementations of the function under test and related functions

- Usages of the function under test and related functions
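The prompt structure described in this section can be sketched roughly as follows. This is an illustration under stated assumptions rather than our actual template: the `Problem:`/`Solution:` labels, the priming wording, the section order, and the `build_prompt` helper are all hypothetical.

```python
# Hypothetical priming text; the real template wording is not reproduced here.
PRIMING = "You are a security engineer writing libFuzzer fuzz targets."


def format_example(signature, target_source):
    """Format one (signature, fuzz target) pair as a problem/solution pair."""
    return f"Problem:\n{signature}\n\nSolution:\n{target_source}"


def build_prompt(signature, project_example, other_examples,
                 priming=PRIMING, guidance=(), anti_patterns=()):
    """Assemble a prompt ending in an unsolved problem for the LLM to complete."""
    sections = [priming]
    if guidance:
        sections.append("Guidance:\n" + "\n".join(f"- {g}" for g in guidance))
    if anti_patterns:
        sections.append("Avoid:\n" + "\n".join(f"- {a}" for a in anti_patterns))
    # Examples from other projects first, then the one from the project under test.
    for example_signature, example_source in list(other_examples) + [project_example]:
        sections.append(format_example(example_signature, example_source))
    # The final problem has no solution: the model is expected to write it.
    sections.append(f"Problem:\n{signature}\n\nSolution:\n")
    return "\n\n".join(sections)


prompt = build_prompt(
    "XML_Bool XMLCALL XML_ParserReset(XML_Parser parser, const XML_Char *encodingName)",
    project_example=("<existing signature>", "<existing fuzz target source>"),
    other_examples=[("<signature A>", "<target A>"), ("<signature B>", "<target B>")],
    guidance=["Use FuzzedDataProvider to derive each argument from the input bytes."],
    anti_patterns=["Do not call functions that are not declared in the project's headers."],
)
```

Ending the prompt on an open `Solution:` keeps the requested fuzz target in the same shape as the worked examples that precede it.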

3. Build and run

We leverage the OSS-Fuzz build infrastructure to build new targets by replacing an existing target’s source code with the newly generated target source code.

OSS-Fuzz projects often have strict compiler flags on by default. To make compilation easier, we also implemented a compiler wrapper that:

- Turns off compiler warnings to prevent trivial issues such as missing pointer casts from blocking compilation.

- Re-compiles targets as C++ (to leverage FuzzedDataProvider).
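A compiler wrapper of this kind can be approximated by rewriting the compiler's argument list before invoking it. The sketch below is an assumption about how such a rewrite might look, not our wrapper's actual code: `relax_compile_command` is a hypothetical helper, and it relies only on the standard GCC/Clang options `-w` (suppress all warnings) and `-x <language>` (override the input language, with `-x none` restoring suffix-based detection).

```python
def relax_compile_command(argv, target_source):
    """Return a copy of a compiler command with warnings off and the
    fuzz target source compiled as C++ (hypothetical helper)."""
    compiler, *args = argv
    relaxed = [compiler, "-w"]  # -w: suppress all warnings
    for arg in args:
        # Drop warning flags such as -Wall or -Werror, but keep the
        # -Wl, / -Wa, / -Wp, pass-through options, which are not warnings.
        if arg.startswith("-W") and not arg.startswith(("-Wl,", "-Wa,", "-Wp,")):
            continue
        if arg == target_source:
            # Treat only the fuzz target as C++, then restore the default
            # so later inputs (objects, archives) are handled by suffix.
            relaxed += ["-x", "c++", arg, "-x", "none"]
        else:
            relaxed.append(arg)
    return relaxed


command = relax_compile_command(
    ["clang", "-Wall", "-Werror", "-Wl,--gc-sections", "-c", "fuzzer.c", "-o", "fuzzer.o"],
    "fuzzer.c",
)
```

Scoping `-x c++` to the target source avoids accidentally reinterpreting other inputs on the same command line. Language-specific flags already present (for example a C-only `-std=` value) would need separate handling that this sketch omits.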

4. Measuring quality of generated targets

An important part of our research is to define metrics to measure the quality of generated targets.

These metrics are:

- Syntax correctness and project consistency. This is measured by the compilation result: whether the target compiles successfully and whether it calls functions in the project correctly, without hallucination.

- Whether it crashes instantly or within the fuzz target itself. Such crashes often indicate a misused API and are likely to be false positives.

- New code coverage. This is measured by the new lines it covered compared to all existing targets in OSS-Fuzz for the same project.

All of these metrics can be automatically computed for a given generated target.
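As an illustration of the new-coverage metric, the sketch below treats each target's coverage as a set of `(file, line)` pairs and counts the lines that only the generated target reaches. The data representation, the `new_line_coverage` helper, and the sample numbers are assumptions made for the example; the real framework derives coverage from OSS-Fuzz coverage reports.

```python
def new_line_coverage(new_target_lines, existing_targets_lines, total_lines):
    """Count lines covered by the new target but by no existing target.

    Returns (number of newly covered lines, percentage of all project lines).
    """
    already_covered = set().union(*existing_targets_lines)
    newly_covered = set(new_target_lines) - already_covered
    return len(newly_covered), 100.0 * len(newly_covered) / total_lines


# Made-up coverage data: two existing targets and one generated target.
existing = [
    {("a.c", 1), ("a.c", 2)},
    {("a.c", 2), ("b.c", 10)},
]
generated = {("a.c", 2), ("a.c", 3), ("b.c", 11)}

count, percent = new_line_coverage(generated, existing, total_lines=20)
```

Here the generated target contributes two lines that no existing target reaches, which is 10% of the hypothetical 20-line project.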

5. LLM Code Fixer

The fuzz targets generated by the LLM often contain various trivial defects, which can be fixed by a separate LLM query.

The prompt of the code fixing query is structured as follows, where the raw code and error are respectively replaced with the fuzz target source code generated by the LLM and the build error messages extracted from pages of build logs:

Given the following code and its build error message, fix the code without affecting its functionality.

First explain the reason, then output the whole fixed code.

If a function is missing, fix it by including the related libraries.

Code:

```
{raw_code}
```

Build error message:

```
{error}
```

Fixed code:

Some cases require several rounds of code-fixing queries. For example, when multiple defects produce several error messages, the LLM sometimes fixes only one of them at a time. Similarly, new defects may be introduced during code fixing. In these cases, we found that iteratively querying the LLM with the same prompt structure gradually fixes all errors.

The LLM often proposes several responses for each query; we prefer the one with the longest code. This is an implementation decision to avoid a quadratically increasing number of targets to build (e.g. the LLMs could propose 4 new targets across N iterations) and to avoid the LLM deleting the function code to fix build failures.

We also check that the generated target includes a call to the function under test. If it does not, this is surfaced as an error to the LLM.
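The fixing procedure in this section can be sketched as a small loop. This is a simplified illustration under stated assumptions: `build` and `query_llm` are hypothetical callables standing in for the OSS-Fuzz build and the LLM API, the round limit is arbitrary, and the check for a call to the function under test is a naive regular expression.

```python
import re

FENCE = "`" * 3  # three backticks, built here to keep this listing readable

FIX_PROMPT = (
    "Given the following code and its build error message, fix the code "
    "without affecting its functionality.\n"
    "First explain the reason, then output the whole fixed code.\n"
    "If a function is missing, fix it by including the related libraries.\n"
    "Code:\n" + FENCE + "\n{raw_code}\n" + FENCE + "\n"
    "Build error message:\n" + FENCE + "\n{error}\n" + FENCE + "\n"
    "Fixed code:\n"
)


def extract_code(response):
    """Return the first fenced block, or the whole response if there is none."""
    match = re.search(FENCE + r"[^\n]*\n(.*?)" + FENCE, response, re.DOTALL)
    return match.group(1) if match else response


def pick_longest(responses):
    """Prefer the response with the longest code, as described above."""
    return max((extract_code(r) for r in responses), key=len)


def missing_call_error(code, function_name):
    """Naive check that the target calls the function under test."""
    if re.search(r"\b" + re.escape(function_name) + r"\s*\(", code):
        return None
    return f"The fuzz target does not call {function_name}."


def fix_until_it_builds(code, function_name, build, query_llm, max_rounds=5):
    """Query the LLM repeatedly until the target builds or rounds run out."""
    for round_number in range(max_rounds + 1):
        error = build(code) or missing_call_error(code, function_name)
        if error is None:
            return code
        if round_number == max_rounds:
            break
        prompt = FIX_PROMPT.format(raw_code=code, error=error)
        code = pick_longest(query_llm(prompt))
    return None


# Stubs for illustration: a "build" that only checks for an include,
# and an "LLM" that always returns the same two candidate answers.
def fake_build(code):
    return None if "#include <stdint.h>" in code else "error: unknown type name 'uint8_t'"


def fake_llm(prompt):
    fixed = ("#include <stddef.h>\n#include <stdint.h>\n"
             "int LLVMFuzzerTestOneInput(const uint8_t *d, size_t n) { parse(d, n); return 0; }\n")
    return ["Add the includes.\n" + FENCE + "\n" + fixed + FENCE,
            "Remove the code.\n" + FENCE + "\nint x;\n" + FENCE]


broken = "int LLVMFuzzerTestOneInput(const uint8_t *d, size_t n) { parse(d, n); return 0; }\n"
result = fix_until_it_builds(broken, "parse", fake_build, fake_llm)
```

With these stubs, the second candidate would also silence the build error by deleting the target body. Choosing the longest code avoids it, and the call check would reject it even if it were chosen.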

Example

Prompt: Incorrect target with missing arguments passed to target function.

After fix: Correct function argument added.

Results

Initially, getting any compilable output was a challenge. Through prompt engineering and our compiler wrapper, we improved this to the point where 14 of the 31 tested OSS-Fuzz projects successfully compiled new targets and increased coverage. The successful examples and prompts are published here.

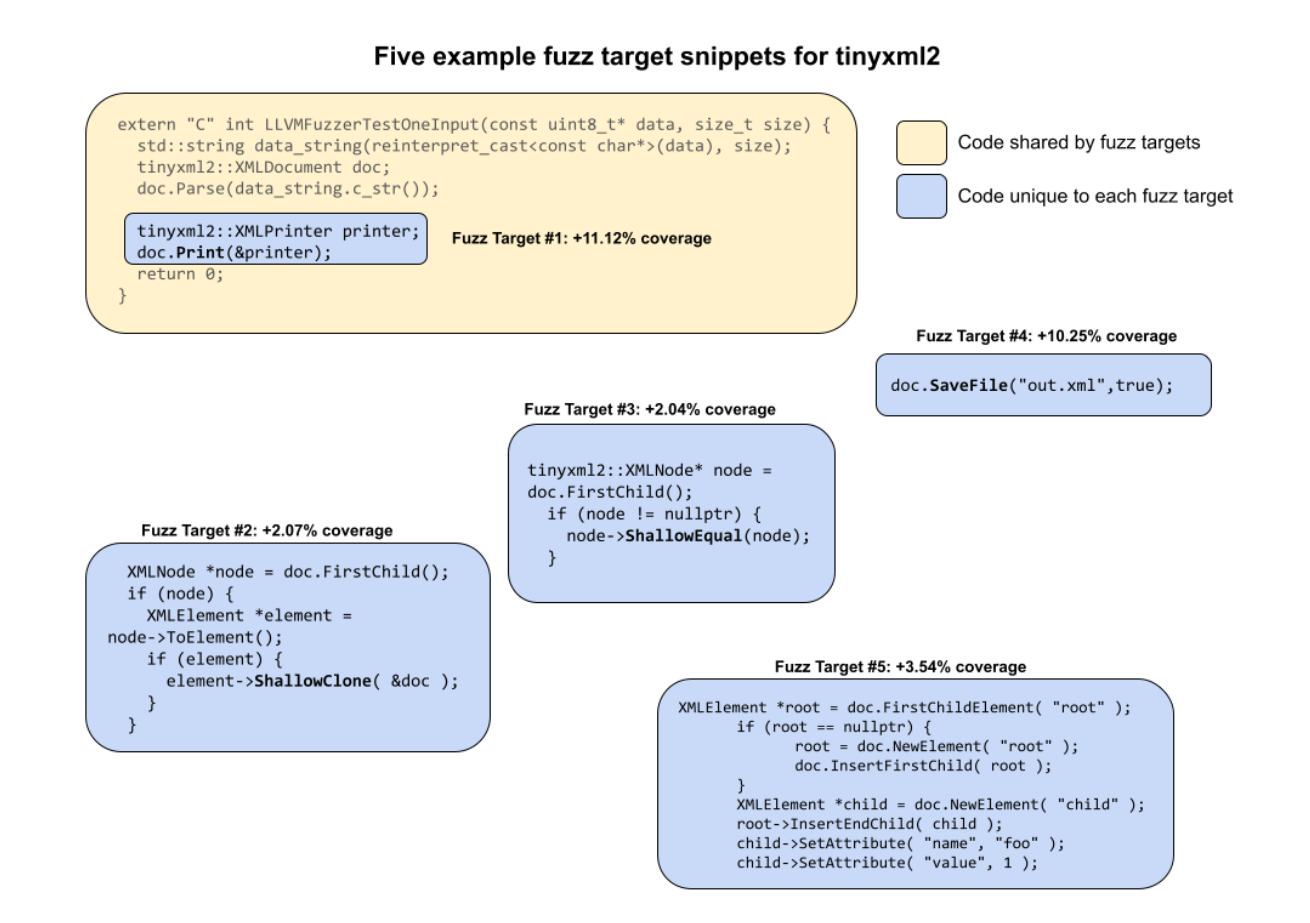

Coverage improvements vary widely, from 0% to 31%.

The top coverage increases, aggregated across all benchmarks per OSS-Fuzz project, are:

| Project | Coverage increase |
| --- | --- |
| tinyxml2 | 31% |
| cjson | 6% |
| expat | 4% |
| libplist | 4% |
| libxml2 | 1% |
| elfutils | 1% |

The best result we’ve had is with the TinyXML2 project, where we managed to increase fuzz coverage from 38% line coverage to 69% line coverage without any interventions.

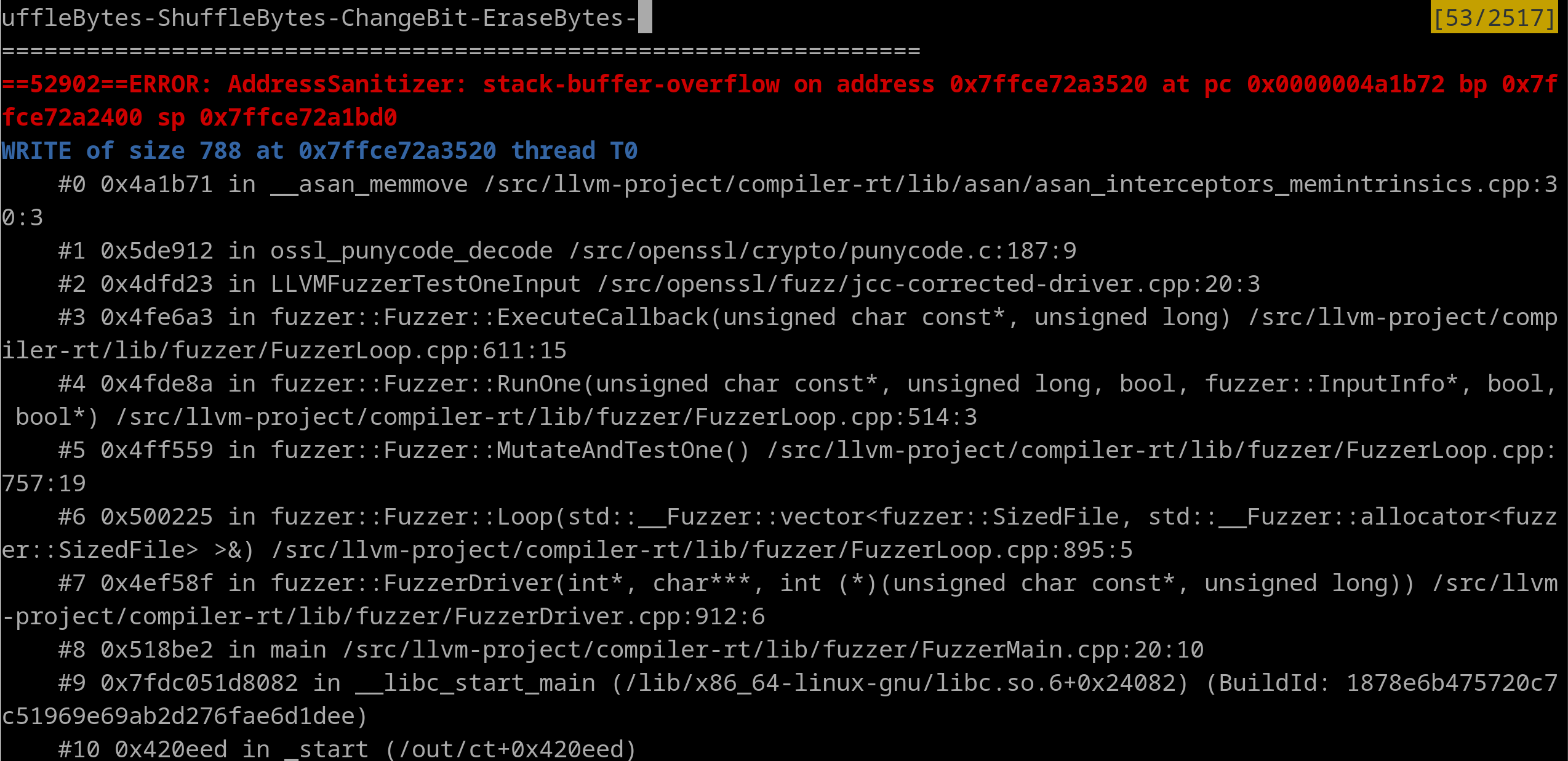

Additionally, we targeted OpenSSL from the perspective of discovering past vulnerabilities that were not found due to lack of fuzzing coverage. We were able to replicate a similar fuzz target that rediscovered CVE-2022-3602.

Future work

We’ve seen very promising early results in this space and will continue our research.

1. Continue research

There are a number of areas we’d like to research further:

- Expand the set of benchmarks to cover all of OSS-Fuzz.

- Continued prompt engineering and experimentation with project-specific context, such as more structured context (e.g. structure definitions, implementations) around the relevant function to test.

- Model fine-tuning

- Expand to other languages beyond C/C++

- Expand research to completely new projects with no existing OSS-Fuzz integration.

2. Open source evaluation framework

We plan to open source the evaluation framework we’ve built to help test arbitrary auto-fuzz target generation capabilities. We hope that OSS-Fuzz can serve as a valuable benchmarking platform for researchers in this space.

3. OSS-Fuzz integration

Ultimately, the goal is to integrate the results of this research into OSS-Fuzz, to provide:

- Free coverage increases for existing projects

- Automated onboarding of new projects, and tools to help maintainers write manual fuzz targets.

Appendix

Successful benchmark results

| Project | Function | Output | Build rate (%) | Max coverage (%) | Max line coverage diff (%) | Reports |
| --- | --- | --- | --- | --- | --- | --- |
| tinyxml2 | tinyxml2-xmldocument-print | Prompt; Fixes; Targets. | 50 | 29.74 | 11.16 | Reports |
| tinyxml2 | tinyxml2-xmldocument-deepcopy | Prompt; Fixes; Targets. | 25 | 26.8 | 4.45 | Reports |
| tinyxml2 | tinyxml2-xmlelement-setattribute | Prompt; Fixes; Targets. | 75 | 26.08 | 3.77 | Reports |
| libplist | plist_print | Prompt; Fixes; Targets. | 25 | 12.88 | 3.42 | Reports |
| tinyxml2 | tinyxml2-xmlelement-doubletext | Prompt; Fixes; Targets. | 62.5 | 25.61 | 3.28 | Reports |
| tinyxml2 | tinyxml2-xmlelement-booltext | Prompt; Fixes; Targets. | 87.5 | 26.18 | 2.9 | Reports |
| tinyxml2 | tinyxml2-xmlelement-insertnewunknown | Prompt; Fixes; Targets. | 25 | 25.67 | 2.64 | Reports |
| tinyxml2 | tinyxml2-xmlelement-int64text | Prompt; Fixes; Targets. | 87.5 | 25.91 | 2.64 | Reports |
| cjson | cjson_compare | Prompt; Fixes; Targets. | 75 | 29.68 | 2.47 | Reports |
| tinyxml2 | tinyxml2-xmlelement-floattext | Prompt; Fixes; Targets. | 62.5 | 25.09 | 2.45 | Reports |
| tinyxml2 | tinyxml2-xmlelement-inttext | Prompt; Fixes; Targets. | 75 | 26.2 | 2.41 | Reports |
| tinyxml2 | tinyxml2-xmlelement-unsigned64text | Prompt; Fixes; Targets. | 37.5 | 25.74 | 2.22 | Reports |
| tinyobjloader | tinyobj-objreader-parsefromfile | Prompt; Fixes; Targets. | 37.5 | 5.7 | 2.16 | Reports |
| tinyxml2 | tinyxml2-xmlelement-unsignedtext | Prompt; Fixes; Targets. | 50 | 25.53 | 2.15 | Reports |
| tinyxml2 | tinyxml2-xmlelement-shallowclone | Prompt; Fixes; Targets. | 50 | 25.03 | 2.07 | Reports |
| cjson | cjson_replaceiteminobject | Prompt; Fixes; Targets. | 37.5 | 27.56 | 1.98 | Reports |
| tinyxml2 | tinyxml2-xmlelement-gettext | Prompt; Fixes; Targets. | 37.5 | 25.42 | 1.96 | Reports |
| tinyxml2 | tinyxml2-xmlelement-shallowequal | Prompt; Fixes; Targets. | 62.5 | 25.58 | 1.96 | Reports |
| cjson | cjson_duplicate | Prompt; Fixes; Targets. | 62.5 | 27.4 | 1.89 | Reports |
| expat | xml_externalentityparsercreate | Prompt; Fixes; Targets. | 12.5 | 1.25 | 1.88 | Reports |
| cjson | cjson_replaceiteminobjectcasesensitive | Prompt; Fixes; Targets. | 87.5 | 25.54 | 1.85 | Reports |
| tinyxml2 | tinyxml2-xmlelement-insertnewcomment | Prompt; Fixes; Targets. | 62.5 | 25.61 | 1.85 | Reports |
| expat | xml_parsercreatens | Prompt; Fixes; Targets. | 12.5 | 45.6 | 1.84 | Reports |
| tinyxml2 | tinyxml2-xmlelement-deleteattribute | Prompt; Fixes; Targets. | 50 | 25.73 | 1.7 | Reports |
| tinyxml2 | tinyxml2-xmlelement-insertnewdeclaration | Prompt; Fixes; Targets. | 50 | 25 | 1.7 | Reports |
| tinyxml2 | tinyxml2-xmlelement-insertnewchildelement | Prompt; Fixes; Targets. | 50 | 24.88 | 1.51 | Reports |
| tinyxml2 | tinyxml2-xmlnode-previoussiblingelement | Prompt; Fixes; Targets. | 87.5 | 26.08 | 1.43 | Reports |
| tinyobjloader | tinyobj-loadobj | Prompt; Fixes; Targets. | 25 | 4.33 | 1.35 | Reports |
| tinyobjloader | tinyobj-material_t-material_t | Prompt; Fixes; Targets. | 25 | 23.91 | 1.35 | Reports |
| tinyxml2 | tinyxml2-xmlelement-insertnewtext | Prompt; Fixes; Targets. | 62.5 | 24.42 | 1.32 | Reports |
| elfutils | dwfl_module_relocate_address | Prompt; Fixes; Targets. | 87.5 | 7.81 | 1.1 | Reports |
| libxml2 | xmlschemavalidatefile | Prompt; Fixes; Targets. | 50 | 4.18 | 0.93 | Reports |
| tinyxml2 | tinyxml2-xmldocument-loadfile | Prompt; Fixes; Targets. | 25 | 2.17 | 0.9 | Reports |
| speex | ogg_stream_packetin | Prompt; Fixes; Targets. | 25 | 8.06 | 0.55 | Reports |
| libxml2 | xmltextreadersetschema | Prompt; Fixes; Targets. | 37.5 | 1.44 | 0.48 | Reports |
| libxml2 | xmltextreaderschemavalidate | Prompt; Fixes; Targets. | 37.5 | 4.03 | 0.36 | Reports |
| libplist | plist_dict_merge | Prompt; Fixes; Targets. | 12.5 | 0.8 | 0.35 | Reports |
| libxml2 | xmltextreaderschemavalidatectxt | Prompt; Fixes; Targets. | 25 | 1.19 | 0.33 | Reports |
| cjson | cjson_printpreallocated | Prompt; Fixes; Targets. | 75 | 26.44 | 0.22 | Reports |
| libucl | ucl_parser_insert_chunk | Prompt; Fixes; Targets. | 25 | 10.5 | 0.22 | Reports |
| libucl | ucl_object_compare | Prompt; Fixes; Targets. | 12.5 | 1.35 | 0.21 | Reports |
| elfutils | dwarf_getlocations | Prompt; Fixes; Targets. | 62.5 | 7.22 | 0.2 | Reports |
| libucl | ucl_parser_add_fd_priority | Prompt; Fixes; Targets. | 71.43 | 19.61 | 0.18 | Reports |
| jsoncpp | json-value-resize | Prompt; Fixes; Targets. | 37.5 | 2.23 | 0.17 | Reports |
| mosquitto | mosquitto_topic_matches_sub2 | Prompt; Fixes; Targets. | 87.5 | 28.28 | 0.16 | Reports |
| xvid | xvid_encore | Prompt; Fixes; Targets. | 31.25 | 0.22 | 0.16 | Reports |
| cjson | cjson_parse | Prompt; Fixes; Targets. | 75 | 25.72 | 0.13 | Reports |
| cjson | cjson_parsewithlength | Prompt; Fixes; Targets. | 62.5 | 25.72 | 0.13 | Reports |
| jsoncpp | json-value-removeindex | Prompt; Fixes; Targets. | 25 | 5.11 | 0.13 | Reports |
| libucl | ucl_object_merge | Prompt; Fixes; Targets. | 25 | 0.65 | 0.12 | Reports |
| speex | ogg_stream_pageout_fill | Prompt; Fixes; Targets. | 6.25 | 0 | 0.07 | Reports |
| libsndfile | sf_command | Prompt; Fixes; Targets. | 25 | 3.41 | 0.06 | Reports |
| mosquitto | mosquitto_topic_matches_sub | Prompt; Fixes; Targets. | 62.5 | 4.03 | 0.05 | Reports |
| libsndfile | sf_format_check | Prompt; Fixes; Targets. | 12.5 | 0.1 | 0.04 | Reports |
| libucl | ucl_object_replace_key | Prompt; Fixes; Targets. | 50 | 7.14 | 0.04 | Reports |
| libdwarf | dwarf_find_die_given_sig8 | Prompt; Fixes; Targets. | 37.5 | 11.62 | 0.01 | Reports |